DSA Counting Sort Time Complexity

See this page for a general explanation of what time complexity is.

Counting Sort Time Complexity

Counting Sort works by first counting the occurrence of different values, and then uses that to recreate the array in a sorted order.

As a rule of thumb, the Counting Sort algorithm runs fast when the range of possible values \(k\) is smaller than the number of values \(n\).

To represent the time complexity with Big O notation we need to first count the number of operations the algorithm does:

- Finding the maximum value: Every value must be evaluated once to find out if it is the maximum value, so \(n\) operations are needed.

- Initializing the counting array: With \(k\) as the maximum value in the array, we need \(k+1\) elements in the counting array to include 0. Every element in the counting array must be initialized, so \(k+1\) operations are needed.

- Every value we want to sort is counted once, then removed, so 2 operations per count, \(2 \cdot n\) operations in total.

- Building the sorted array: Create \(n\) elements in the sorted array: \(n\) operations.

In total we get:

\[ \begin{equation} \begin{aligned} Operations {} & = n + (k+1) + (2 \cdot n) + n \\ & = 4 \cdot n + k + 1 \\ & \approx 4 \cdot n + k \end{aligned} \end{equation} \]

Based on what have seen about time complexity earlier, we can create a simplified expression using Big O notiation to represent time complexity:

\[ \begin{equation} \begin{aligned} O(4 \cdot n + k) {} & = O(4 \cdot n) + O(k) \\ & = O(n) + O(k) \\ & = \underline{\underline{O(n+k)}} \end{aligned} \end{equation} \]

It has already been mentioned that Counting Sort is effective when the range of different values \(k\) is relatively small compared to the total number of values to be sorted \(n\). We can now see this directly from the Big O expression \(O(n+k)\).

Just imagine for example that the range of different numbers \(k\) is 10 times as large as the number of the values to be sorted. In such a case we can see that the algorithm uses most of its time on handling the range of different numbers \(k\), although the actual number of values to be sorted \(n\) is small in comparison.

It is not straight forward to show the time complexity for Counting Sort in a graph, or to have a simulation for the time complexity as we have had for the previous algorithms, because the time complexity is so greatly affected by the range of values \(k\).

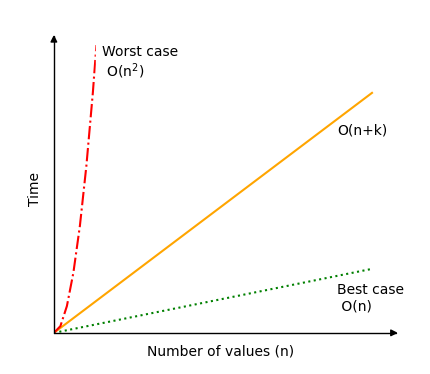

Below is a plot that shows how much the time complexity for Counting Sort can vary, followed by an explanation for the best and worst case scenarios.

The best case scenario for Counting Sort would be to have the range \(k\) just a fraction of \(n\), let's say \(k(n)=0.1 \cdot n\). As an example of this, for 100 values, the range would be from 0 to 10, or for 1000 values the range would be from 0 to 100. In this case we get time complexity \(O(n+k)=O(n+0.1 \cdot n) = O(1.1 \cdot n)\) which is simplified to \(O(n)\).

The worst case however would be if the range is a lot larger than the input. Let's say for an input of just 10 values the the range is between 0 and 100, or similarly, for an input of 1000 values, the range is between 0 and 1000000. In such a scenario, the growth of \(k\) is quadratic with respect to \(n\), like this: \(k(n)=n^2\), and we get time complexity \(O(n+k)=O(n+n^2)\) which is simplified to \(O(n^2)\). A case that is even worse than this could also be constructed, but this case is chosen because it is relatively easy to understand, and perhaps not that unrealistic either.

As you can see, it is important to consider the range of values compared to the number of values to be sorted before choosing Counting Sort as your algorithm. Also, as mentioned at the top of the page, keep in mind that Counting sort only works for non negative integer values.

Counting Sort Simulation

Run different simulations of Counting Sort to see how the number of operations falls between the worst case scenario \(O(n^2)\) (red line) and best case scenario \(O(n)\) (green line).

{{ this.userX }}

{{ this.userK }}

Operations: {{ operations }}

As mentioned previously: if the numbers to be sorted varies a lot in value (large \(k\)), and there are few numbers to sort (small \(n\)), the Counting Sort algorithm is not effective.

If we hold \(n\) and \(k\) fixed, the "Random", "Descending" and "Ascending" alternatives in the simulation above results in the same number of operations. This is because the same thing happens in all three cases: A counting array is set up, the numbers are counted, and the new sorted array is created.